
These are the Most Important Computer Science Discoveries in 2023

Artificial intelligence produced text and art at a level not seen before, and computer scientists devised algorithms that solved long-standing problems. In 2023, AI permeated popular culture, appearing in everything from internet memes to Senate hearings. Large language models like the ones behind ChatGPT generated much of this excitement, even as researchers still struggled to pry open the “black box” of their inner workings. Image generation systems also routinely impressed and unsettled us with their artistic abilities, even though they are explicitly built on concepts borrowed from physics.

The year brought many other advances in computer science. Researchers made subtle but significant progress on one of the field’s oldest problems, “P versus NP,” a question about the nature of hard problems. In August, my colleague Ben Brubaker examined this classic problem and the efforts of computational complexity theorists to answer the question: Why is it hard (in a precise, quantitative sense) to understand what makes hard problems hard? “It hasn’t been an easy journey — the path is littered with false turns and roadblocks, and it loops back on itself again and again,” Brubaker wrote. “Yet for meta-complexity researchers, that journey into an uncharted landscape is its own reward.”

The year also saw plenty of smaller-scale but no less significant individual advances. Shor’s algorithm, the long-promised game-changer of quantum computing, got its first major update after almost thirty years. Researchers finally learned how to find the shortest path through a general kind of network almost as quickly as is theoretically possible. And cryptographers, making an unexpected connection to artificial intelligence, showed that machine learning models and machine-generated content must also contend with hidden vulnerabilities and messages.

Some problems, it seems, remain beyond our current ability to solve.

Hard Questions, Hard Answers

Computer scientists have been trying to resolve “P versus NP,” the biggest open question in their field, for half a century. Roughly speaking, it asks how hard certain hard problems intrinsically are. For those fifty years, their efforts have ended in failure. Often, just as they began to make headway with a new approach, they hit a barrier proving that the approach could never work. Eventually they began to ask why it is so hard to prove that some problems are hard. Their attempts to answer such inward-looking questions have grown into a subfield known as meta-complexity, which has yielded the deepest insights into the question so far.

The Powers of Large Language Models

When enough things come together, the results can be surprising. Water molecules create waves, flocks of birds swoop and soar as one, and lifeless atoms combine into life. Scientists call these “emergent behaviors,” and they have recently observed the same phenomenon in large language models, AI systems trained on massive text datasets to generate humanlike writing. Once these models reach a certain size, they can suddenly do unexpected things that smaller models cannot, such as solving certain math problems.

But the flurry of interest in large language models has raised new concerns. These programs invent falsehoods, perpetuate social biases, and fail to handle even some of the most basic elements of human language. Moreover, they remain black boxes whose inner workings nobody fully understands, though some researchers have ideas for how to change that.

Solving Negativity

Computer scientists have long known of algorithms that can quickly find routes through graphs, which are networks of nodes connected by edges, when each connection carries some cost, like a toll road linking two cities. But for decades they could not find a fast algorithm for determining the shortest path when a road could carry either a cost or a reward. Late last year, three researchers published a workable algorithm that is almost as fast as theoretically possible.
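To make the problem concrete, here is a minimal Python sketch of the classic Bellman-Ford method, a much older and slower technique that already handles rewards (negative edge weights). It is not the near-optimal algorithm described above, only a baseline that shows what "shortest path with costs and rewards" means. The road network and the function name are hypothetical, chosen for illustration.

```python
from math import inf

def bellman_ford(num_nodes, edges, source):
    """Classic Bellman-Ford (the slow baseline, not the new algorithm).

    edges is a list of (from_node, to_node, weight) tuples.
    Returns the list of shortest distances from source, or None if a
    negative cycle (an endless loop of net reward) is reachable.
    """
    dist = [inf] * num_nodes
    dist[source] = 0
    # Relax every edge up to num_nodes - 1 times.
    for _ in range(num_nodes - 1):
        changed = False
        for u, v, w in edges:
            if dist[u] + w < dist[v]:
                dist[v] = dist[u] + w
                changed = True
        if not changed:
            break  # distances have settled early
    # If any edge can still be improved, a negative cycle exists.
    for u, v, w in edges:
        if dist[u] + w < dist[v]:
            return None
    return dist

# Hypothetical road network: positive weights are tolls, negative are rewards.
roads = [(0, 1, 4), (0, 2, 5), (1, 3, 3), (2, 1, -3), (3, 2, 2)]
print(bellman_ford(4, roads, 0))  # [0, 2, 5, 5]
```

Because every edge may be re-examined once per node, the running time grows with the number of nodes times the number of edges, which is the kind of cost the newer result avoids.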

Then, in March, researchers published a new algorithm that can determine when two types of mathematical objects known as groups are the same in a precise sense. The work may lead to algorithms that can quickly compare groups (and perhaps other objects), a surprisingly difficult task. Other notable algorithm stories this year included a new way of computing prime numbers that combines random and deterministic approaches, the refutation of a long-standing conjecture about the performance of information-limited algorithms, and an analysis showing how a counterintuitive idea can improve the performance of gradient descent algorithms, which are widely used in machine learning programs and other areas.

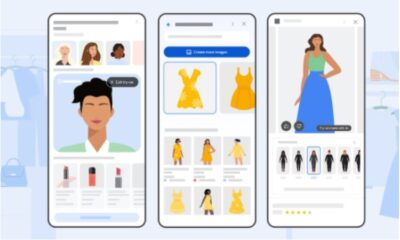

Appreciating AI Art

Image generation tools such as DALL·E 2 exploded in popularity this year. Give them a written prompt, and they will produce a tableau of art depicting whatever you requested. But the work that made most of these artificial artists possible had been brewing for many years. Built on concepts from physics that describe spreading fluids, these so-called diffusion models effectively learn how to unscramble formless noise into a sharp image. The process is like watching a cup of coffee run backward in time, so that evenly dispersed cream gathers back into a distinct dollop.
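The noising and un-noising idea can be sketched in a few lines of Python. This toy assumes one common formulation in which clean data is blended with Gaussian noise according to a signal fraction (called `alpha_bar` here, an assumed name). A real diffusion model trains a neural network to predict the noise; the sketch below skips that step and simply reuses the true noise, so it shows only the arithmetic of scrambling and unscrambling, not how a model learns.

```python
import math
import random

def add_noise(x0, alpha_bar, rng):
    """Forward step: blend clean values with Gaussian noise.

    alpha_bar near 1 keeps mostly signal; near 0 keeps mostly noise.
    """
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
          for x, e in zip(x0, noise)]
    return xt, noise

def remove_noise(xt, predicted_noise, alpha_bar):
    """Reverse step: recover the clean values from an estimate of the noise."""
    return [(x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
            for x, e in zip(xt, predicted_noise)]

rng = random.Random(0)            # fixed seed so the run is repeatable
pixels = [0.2, -1.0, 0.5]         # hypothetical "image" of three values
noisy, true_noise = add_noise(pixels, alpha_bar=0.1, rng=rng)

# A trained network would supply the noise estimate; here we reuse the truth.
restored = remove_noise(noisy, true_noise, alpha_bar=0.1)
print(all(abs(a - b) < 1e-9 for a, b in zip(pixels, restored)))  # True
```

The quality of a real model depends entirely on how well its network estimates the noise, which is the part this sketch leaves out.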

AI tools have also succeeded in improving the fidelity of existing images, though this is still far from the TV trope of a police officer yelling “Enhance!” More recently, researchers have turned to physical processes other than diffusion to explore new ways for machines to generate images. One newer approach, governed by the Poisson equation, which describes how electric forces vary with distance, has already proved more tolerant of errors and, in some cases, easier to train than diffusion models.

Enhancing the Quantum Standard

For decades, Shor’s algorithm has been the standard example of the power of quantum computers. Developed by Peter Shor in 1994, this set of instructions lets a machine that exploits the quirks of quantum physics break large numbers into their prime factors far faster than an ordinary, classical computer could, potentially laying waste to much of the internet’s security systems. In August, a computer scientist developed an even faster version of the algorithm, the first significant improvement since its invention. “I would have thought that any algorithm that worked with this basic outline would be doomed,” Shor said. “But I was wrong.”
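The classical skeleton of Shor's original approach can be sketched in Python. Factoring a number reduces to finding the "order" of a randomly chosen base, and a quantum computer is needed only to speed up that one step. In the sketch below the order is found by brute force, so it works only for tiny numbers; the function names are illustrative, and the faster August variant is not shown.

```python
from math import gcd

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, assuming gcd(a, n) == 1.

    Brute force here; this is the step a quantum computer accelerates.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_via_order(n, a):
    """Try to split n using base a. Returns a factor pair, or None if
    this base is unlucky and another one should be tried."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g          # the base already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None               # odd order: no usable square root of 1
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None               # trivial square root: unlucky base
    p = gcd(half - 1, n)
    return p, n // p

print(factor_via_order(15, 7))    # (3, 5)
print(factor_via_order(15, 14))   # None
```

For numbers of cryptographic size, the loop in `find_order` would run for an astronomically long time, which is why the quantum speedup matters.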

Practical quantum computers, however, are still out of reach. In real life, tiny errors can quickly add up, ruining computations and wiping out any quantum advantage. In fact, late last year a group of computer scientists showed that for one particular problem, a classical algorithm does about as well as a quantum one that includes errors. But there is still hope: Work in August showed that certain error-correcting codes, known as low-density parity-check codes, are at least ten times more efficient than the current standard.

Hiding Secrets in AI

In a surprising finding at the intersection of cryptography and AI, a team of computer scientists showed that it is possible to plant practically undetectable backdoors in machine learning models, with their invisibility backed by the same logic as the best modern encryption methods. Because the researchers focused on relatively simple models, it is unclear whether the same holds for the more complicated models behind much of today’s AI technology. Still, the findings suggest ways for future systems to guard against such security vulnerabilities, and they signal renewed interest in how the two fields can help each other grow.

Security concerns of this kind are part of the reason Cynthia Rudin has championed interpretable models as a way to better understand what happens inside machine learning algorithms. Researchers like Yael Tauman Kalai, meanwhile, have advanced our notions of security and privacy, even in the face of looming quantum technology. And a result in the closely related field of steganography showed how to hide a message with perfect security inside machine-generated media.

Vector-Driven AI

AI has grown immensely powerful, but the artificial neural networks that underpin most modern systems share two flaws: they are very expensive to train and operate, and they too easily become inscrutable black boxes. Many researchers argue that it may be time to try another approach. Instead of using artificial neurons that detect individual traits or characteristics, AI systems could represent concepts with endless variations of hyperdimensional vectors, arrays of thousands of numbers.

This system lets researchers work directly with the concepts and relationships a model considers, giving them greater insight into the model’s reasoning. It is also more versatile and better equipped to handle errors, which makes its computations far more efficient. Hyperdimensional computing is still in its infancy, but as it is put to bigger tests, the new approach may start to gain traction.
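A small Python sketch gives a feel for how concepts can live in such vectors. It assumes one common textbook scheme: random vectors of +1 and -1 entries, "binding" by elementwise multiplication, and "bundling" by majority vote. The concept names, the dimension, and the helper functions are illustrative assumptions, not details of any particular research system.

```python
import random

DIM = 10_000  # "thousands of numbers" per concept

def random_hv(rng):
    """A random hypervector of +1/-1 entries standing for one concept."""
    return [rng.choice((-1, 1)) for _ in range(DIM)]

def bind(a, b):
    """Pair two concepts (e.g. a role and its value) by elementwise product."""
    return [x * y for x, y in zip(a, b)]

def bundle(*vectors):
    """Superimpose several vectors by elementwise majority vote."""
    return [1 if sum(column) >= 0 else -1 for column in zip(*vectors)]

def similarity(a, b):
    """Normalized dot product: about 0 for unrelated vectors, 1 for identical."""
    return sum(x * y for x, y in zip(a, b)) / DIM

rng = random.Random(1)
shape, color, size = (random_hv(rng) for _ in range(3))   # roles
circle, red, small = (random_hv(rng) for _ in range(3))   # values

# One vector that stores "a small red circle".
record = bundle(bind(shape, circle), bind(color, red), bind(size, small))

# Ask "what color?": binding with the role again pulls out a noisy copy of red.
answer = bind(record, color)
print(similarity(answer, red) > 0.3)           # True: clearly resembles red
print(abs(similarity(answer, circle)) < 0.1)   # True: unrelated to circle
```

The query step is an explicit algebraic operation on named concepts, and the answer survives even though the stored record is a noisy blend, which hints at the error tolerance described above.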
