Google AI research makes it easier for robots to learn a realistic dog trot

As capable as robots are, the animals they tend to be modeled on are always much better at moving. That is partly because it is hard to learn how to walk like a dog directly from a dog, but this research from Google's AI labs makes it considerably easier.

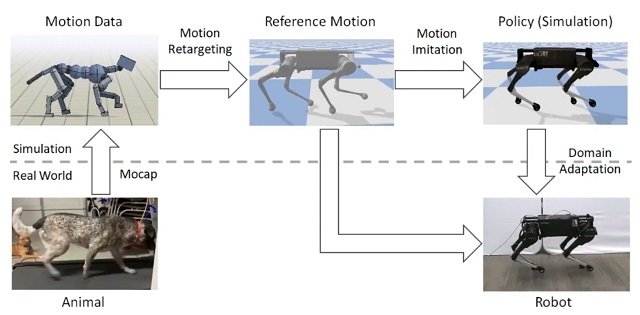

The goal of this research, a collaboration with UC Berkeley, was to find a way to efficiently and automatically transfer “agile behaviors” like a light-footed trot or a turn from their source (a good dog) to a quadrupedal robot. This kind of thing has been done before, but as the researchers’ blog post points out, the established training process can often “require a great deal of expert insight, and often involves a lengthy reward tuning process for each desired skill.”

That doesn’t scale well, naturally, but the manual tuning is needed to make sure the robot approximates the animal’s movements well. Even a very doglike robot isn’t actually a dog, and the way a dog moves may not be exactly how the robot should move, which can lead the robot to fall, lock up or otherwise fail.

The Google AI project addresses this by adding a bit of controlled chaos to the normal order of things. Ordinarily, the dog’s motions would be captured and key points like feet and joints would be carefully tracked. These points would be mapped onto the robot in a digital simulation, where a virtual version of the robot attempts to imitate the dog’s motions with its own, learning as it goes.
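To make the imitation step concrete, here is a minimal sketch of how a simulated robot's pose could be scored against the tracked points from the dog. It is an illustration under stated assumptions, not the researchers' actual reward: the function name `imitation_reward`, the `scale` value and the exponential form are all hypothetical choices.

```python
import math

def imitation_reward(robot_points, reference_points, scale=5.0):
    """Score how closely the robot's key points match the reference motion.

    Both arguments are lists of (x, y, z) positions for the same key points
    (for example feet and joints) at one time step. The result lies in
    (0, 1], and equals 1.0 only when every point matches exactly.
    The exponential form and `scale` are assumptions made for this sketch.
    """
    if len(robot_points) != len(reference_points):
        raise ValueError("robot and reference must track the same key points")

    # Sum of squared distances between matching key points.
    squared_error = 0.0
    for (rx, ry, rz), (tx, ty, tz) in zip(robot_points, reference_points):
        squared_error += (rx - tx) ** 2 + (ry - ty) ** 2 + (rz - tz) ** 2

    # Larger tracking error gives a smaller reward.
    return math.exp(-scale * squared_error)


# Hypothetical key points: two feet of the reference dog at one time step.
reference = [(0.0, 0.0, 0.0), (0.3, 0.0, 0.0)]
close_pose = [(0.0, 0.0, 0.01), (0.3, 0.0, 0.0)]
far_pose = [(0.0, 0.2, 0.1), (0.5, 0.0, 0.0)]
```

A learning algorithm would then adjust the virtual robot's controller to raise this score over the whole motion clip, so `close_pose` is preferred over `far_pose`.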

So far, so good, but the real problem comes when you try to use the results of that simulation to control an actual robot. The real world is not a 2D plane with idealized friction rules and all that. Unfortunately, that means uncorrected simulation-based gaits tend to walk a robot right into the ground.

To prevent this, the researchers introduced an element of randomness to the physical parameters used in the simulation, making the virtual robot weigh more, or have weaker motors, or experience greater friction with the ground. This forced the machine learning model describing how to walk to account for all kinds of small variances and the complications they create down the line, and to learn how to counteract them.
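The idea of randomizing the simulated physics can be sketched in a few lines. This is a simplified illustration, not the researchers' code: the parameter names, the ranges in `RANGES` and the helper functions are all hypothetical, and a real setup would pass each sampled set of parameters to a physics simulator before running a training episode.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class PhysicsParams:
    """Physical properties applied to the simulated robot for one episode."""
    mass_scale: float            # multiplier on the robot's nominal mass
    motor_strength_scale: float  # multiplier on nominal motor strength
    ground_friction: float       # friction coefficient between feet and ground

# Hypothetical ranges chosen for illustration only.
RANGES = {
    "mass_scale": (0.8, 1.2),
    "motor_strength_scale": (0.8, 1.0),
    "ground_friction": (0.5, 1.25),
}

def sample_physics(rng):
    """Draw one random set of physical parameters from RANGES."""
    return PhysicsParams(
        **{name: rng.uniform(low, high) for name, (low, high) in RANGES.items()}
    )

def training_episodes(num_episodes, seed=0):
    """Yield a freshly randomized set of physics for every training episode."""
    rng = random.Random(seed)
    for _ in range(num_episodes):
        yield sample_physics(rng)

# Each episode sees a slightly heavier, weaker or grippier robot.
for params in training_episodes(3):
    print(params)
```

Because the policy never trains on the same physics twice, it cannot rely on any one idealized set of simulator settings, which is the property that helps it carry over to a real robot.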

Learning to cope with that randomness made the learned walking method far more robust in the real world, leading to a passable imitation of the target dog walk, and even more complicated moves like turns and spins, with no manual intervention and only a little extra virtual training.

Naturally, manual tweaking could still be added to the mix if desired, but as it stands this is a big improvement over what could previously be done fully automatically.

In another research project described in the same post, a second group of researchers describe a robot teaching itself to walk on its own, but equipped with the intelligence to avoid walking outside its designated area and to pick itself up when it falls. With those basic skills baked in, the robot was able to amble around its training area continuously with no human intervention, learning quite respectable locomotion skills.
